Deepfake videos, a form of digital manipulation created with artificial intelligence, can convincingly swap one person’s face for another’s in video footage and have the potential to cause significant harm. A recent incident involving Bollywood actresses Wamiqa Gabbi and Alia Bhatt has highlighted this issue.

The Deepfake Video

On May 7, 2024, a manipulated video involving Wamiqa Gabbi and Alia Bhatt surfaced on social media. In this video, Wamiqa’s face was digitally replaced with Alia’s, a clear instance of deepfake technology misuse. The video, shared by Instagram user @unfixface, appears to show Alia wearing a red saree with her hair braided and seated on the floor. The manipulation is convincing enough that it is difficult to recognize as a deepfake at first glance.

Public Reaction

The video has gained widespread attention and sparked a significant public reaction. Upon realizing that it was manipulated, internet users voiced their concerns, with some questioning the legality of using AI-generated videos. The incident has ignited a debate about the ethical implications of deepfake technology and the urgent need for regulations to prevent its misuse.

Deepfake: A Growing Concern

Deepfake videos are synthetic media created with artificial intelligence (AI) and machine learning algorithms that edit or alter existing footage. These videos often involve superimposing or replacing someone’s face or voice in an existing video with another person’s likeness. While the technology has potential for creative uses, its misuse raises serious concerns about privacy, consent, and misinformation.

Bollywood And Deepfake

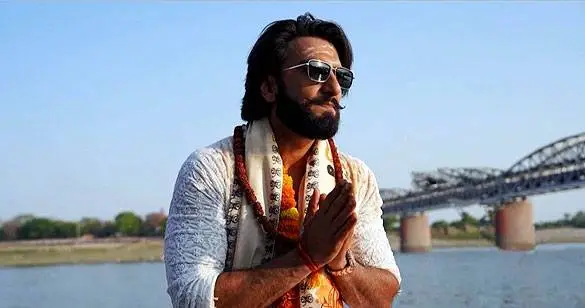

Regrettably, this is not an isolated incident in Bollywood. Deepfake videos have targeted several actors, including Ranveer, Aamir Khan, Rashmika Mandanna, Katrina Kaif, and Kajol. These recurring incidents are a stark reminder of the urgent need for awareness and regulations to combat the misuse of deepfake technology.